Pranjal’s ML algorithm wins him a Silver Award at SSEF 2025.

It is quite interesting that young secondary / high school students are introduced to research, a field traditionally reserved for PhD students and seasoned researchers. The roughly 350 finalist research works and projects across various scientific domains at SSEF 2025 are a good testament that students are responding to this very well.

My sincere thanks to all the organisers and educators, and all the best to all the participants.

Bengali as a Mother Tongue Language in Singapore

Soon after finishing the buffet at the Singapore Mathematical Society event, I had to rush off to attend another annual prize-giving ceremony, organized by the Bengali school, BLLS.

There are two Bengali schools in Singapore – BLLS and BLCF. My daughter, Deepa, a student of NUS High School, attends BLLS, which operates every Saturday for 5 hours and teaches only the Bengali language.

Today she got the 1st prize for the 7th year in a row since Class 1. That is not to say she is very good at Bengali. However, whatever she has learnt would not have been possible had the Singapore Government not facilitated taking Bengali (along with many other languages) as a Mother Tongue till the 12th standard.

Singapore is possibly the only country (as far as I know) outside our native places where one can take Bengali as a mother tongue and it counts as a valid/official subject.

Thank you Singapore for helping the small linguistic groups keep their languages alive.

Thank you all teachers and others involved in running the school, who either take a token salary or volunteer for their love for the language.

SMPF 2023

Today we attended the Annual Prize Presentation Ceremony 2023 by Singapore Mathematical Society at the NUS High School Auditorium.

Students of Singapore who did well in Singapore Mathematics Projects Festival and Olympiads were awarded. It was attended by many distinguished guests including Professors from Department of Statistics and Data Science (including its Head of Department) at NUS, Department of Mathematics at NUS and other institutes.

My son, Pranjal, got a gold award in Singapore Mathematics Projects Festival (Junior Section). It is one of the two gold awards in this category in Singapore in 2023.

I was amazed at the quantity and quality of the projects. In the Math Olympiad too, Singapore is punching well above its weight, ranking 12th as a country this year.

Thank you all – Singapore Mathematical Society, Ministry of Education, as well as all teachers and mentors involved in training the young students and organizing the events.

Soon after completing the buffet, I had to rush off to attend another annual prize giving ceremony by the Bengali School, BLLS.

Moot Parliament Programme 2023

My Son, Pranjal’s 5-member team from NUS High School gets their bill “passed” in Singapore Moot Parliament (First Prize in Written Bill).

25 teams from Secondary 3 & 4 (class 9 & 10) from a number of secondary schools made it to the Parliament House on Thursday, 17th Aug 2023. While the 12-page bill from Pranjal’s team passed without debate, some others were contested and a parliamentary debate ensued in the presence of the Deputy Clerk of Parliament (Singapore), professors from NUS law school and other distinguished guests.

Gifted Education Branch of Ministry of Education (MOE), Singapore organizes it “to develop students’ interest in parliamentary legislation and develop active citizenry”.

Thank you MOE, NUS Faculty of Law, NUS High School (and other secondary schools), all lawyers and mentors who prepared the students for months on the intricacies of parliamentary legislation.

Singapore is a little dot in the heart of Asia that depends on others even for water. Devoid of natural resources, the only thing that Singapore is left with is her 5-million population. That leaves no option but to nurture them so that they are well-equipped to make the best use of Singapore’s strategic location for her survival and prosperity.

Young Technopreneur Challenge 2023

My daughter, Deepa’s team from NUS High won Young Technopreneur Challenge 2023.

30 teams from various secondary schools (sec 2 and sec 3 students) in Singapore came up with a number of STEM projects.

Deepa and 3 other members of her team are in sec 2 (class eight).

Apart from being champion, her team also won the Best Prototype award.

Each of the 4 members received a laptop, sponsored by HP.

Being champion also means they will be funded to take the project forward. It is about generating electricity from lifts.

Thank you JA (Junior Achievement) Singapore, sponsors HP and Southwest CDC, all mentors and all others who were involved in this.

Instilling a techno-entrepreneurial spirit in young minds is a great idea.

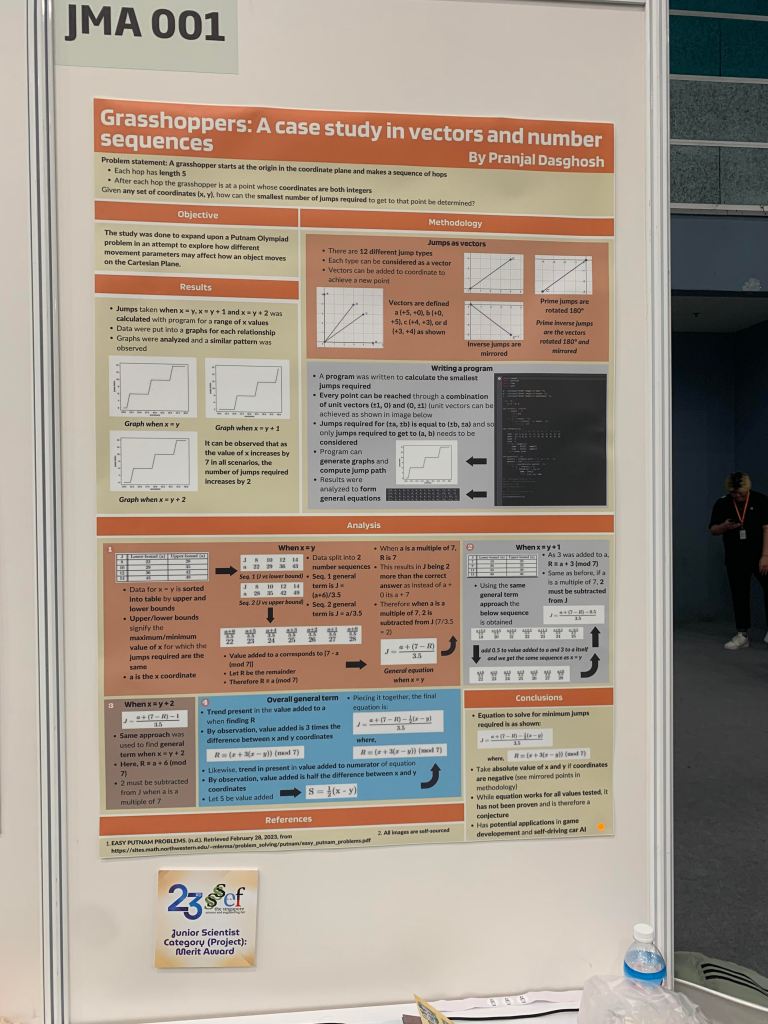

Grasshoppers: A Case Study Of Vectors And Number Sequences

My son, Pranjal’s Math project is in the final of SSEF Junior Scientist Category (only finalist in Math category)

SSEF stands for Singapore Science and Engineering Fair.

Pranjal is a secondary 3 (class 9) student of NUS High.

Update 1:

Pranjal’s project got merit award.

Among 30 finalists, 4 projects got distinction and 4 projects got merit award.

Update 2:

Pranjal worked further on his project after the SSEF submission and received a gold award in the Junior Section of the Singapore Mathematics Project Festival 2023.

His work will be published in the upcoming volume of Mathematical Medley.

Johnson’s Algorithm

47th Friday Fun Session – 19th Jan 2018

We have seen why Dijkstra’s algorithm cannot work with negative edges and that we cannot trivially add a constant to each of the edge weights to make them non-negative. This is where Johnson’s algorithm comes into play. It finds a special set of offset values that remove the negative edges (change the negative edge weights to non-negative edge weights), and the transformed graph is then all set to work with Dijkstra’s algorithm.

How Does Johnson’s Algorithm work?

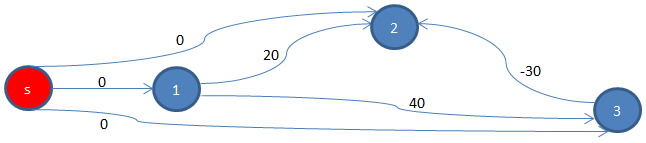

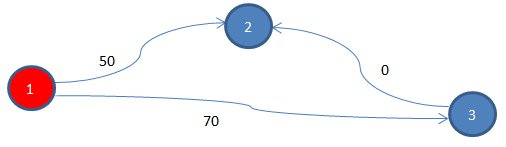

Johnson’s algorithm starts with a graph having negative edge(s). Let’s go through it using an example as shown below.

Add a New Node

It then adds a new vertex, let’s call it s, with edges starting from it and ending at each of the vertices of the existing graph, each having a cost of 0, as we have done earlier.

Apply Bellman-Ford

Then it applies Bellman-Ford, a Single Source Shortest Path (SSSP) algorithm that can work with a graph having negative edge(s). We will use s as the source, and find shortest path from it to all other vertices.

We also need to check whether a negative cycle exists, something that Bellman-Ford can detect. If it exists then we cannot proceed further as we cannot find shortest path in a graph with negative cycle. In our example graph, there is no negative cycle.

We find d[s, 1] = 0, d[s, 2] = -30, and d[s, 3] = 0 as shown below, using this code, where d[s, t] denotes the cost of the shortest path from s to t.

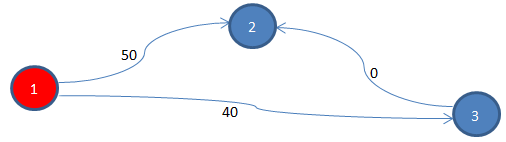
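The step above can be reproduced with a short Python sketch. It is only an illustration under stated assumptions, not the code used for the session: the edge weights are the three from the example graph, and the function name and the label "s" are made up for this sketch.

```python
# Example graph from this post: edge (u, v) -> weight.
edges = {(1, 2): 20, (1, 3): 40, (3, 2): -30}
vertices = [1, 2, 3]


def bellman_ford_from_new_source(vertices, edges):
    """Add a source 's' with 0-cost edges to every vertex, then run Bellman-Ford.

    Returns a dict holding d[s, v] for every original vertex v, or None
    when a negative cycle is detected.
    """
    s = "s"  # hypothetical label for the extra node
    all_edges = dict(edges)
    for v in vertices:
        all_edges[(s, v)] = 0

    dist = {v: float("inf") for v in vertices}
    dist[s] = 0

    # Relax every edge |V| - 1 times, where |V| also counts s,
    # so the number of passes equals the number of original vertices.
    for _ in range(len(vertices)):
        for (u, v), w in all_edges.items():
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w

    # One more pass: any further improvement means a negative cycle.
    for (u, v), w in all_edges.items():
        if dist[u] + w < dist[v]:
            return None

    del dist[s]
    return dist


d = bellman_ford_from_new_source(vertices, edges)
print(d)  # {1: 0, 2: -30, 3: 0}
```

The printed values match d[s, 1] = 0, d[s, 2] = -30 and d[s, 3] = 0 from the text.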

Adjust Original Edge Weights

Now using these shortest path costs, original edges will be updated using the formula: w’[u, v] = w[u, v] + d[s, u] – d[s, v]. Applying the same for the original 3 edges in the original graph, we find,

w’[1, 2] = w[1, 2] + d[s, 1] – d[s, 2] = 20 + 0 – (-30) = 50

w’[1, 3] = w[1, 3] + d[s, 1] – d[s, 3] = 40 + 0 – 0 = 40

w’[3, 2] = w[3, 2] + d[s, 3] – d[s, 2] = (-30) + 0 – (-30) = 0

Now that we have adjusted the original edge costs, the new (cost) adjusted graph (without s and associated edges) does not have any negative edge any more. Let’s see what the cost adjusted graph looks like.
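As a quick check of the arithmetic, the same adjustment can be written in a few lines of Python. This is a sketch only; the dictionary layout and the name reweight are assumptions made for illustration.

```python
# Original edge weights and the Bellman-Ford results d[s, v] from the text.
w = {(1, 2): 20, (1, 3): 40, (3, 2): -30}
d = {1: 0, 2: -30, 3: 0}


def reweight(w, d):
    """Apply w'[u, v] = w[u, v] + d[s, u] - d[s, v] to every edge."""
    return {(u, v): cost + d[u] - d[v] for (u, v), cost in w.items()}


w_adj = reweight(w, d)
print(w_adj)  # {(1, 2): 50, (1, 3): 40, (3, 2): 0}
```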

Apply Dijkstra

With this non-negative edge graph we can proceed with Dijkstra’s algorithm. For each shortest path found in this graph from u to v, we have to adjust the cost back by subtracting (d[s, u] – d[s, v]) from it.
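A minimal sketch of this last step, assuming the adjusted weights and the d[s, v] values computed in the example. The heap-based Dijkstra below is a generic version written for this illustration, and the function names are hypothetical.

```python
import heapq

# Cost adjusted edges and Bellman-Ford results d[s, v] from the example.
w_adj = {(1, 2): 50, (1, 3): 40, (3, 2): 0}
d = {1: 0, 2: -30, 3: 0}


def dijkstra(edges, source):
    """Shortest path costs from source; assumes non-negative edge weights."""
    adj = {}
    for (u, v), cost in edges.items():
        adj.setdefault(u, []).append((v, cost))
    dist = {source: 0}
    heap = [(0, source)]
    done = set()
    while heap:
        cost_u, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        for v, cost in adj.get(u, []):
            new_cost = cost_u + cost
            if v not in dist or new_cost < dist[v]:
                dist[v] = new_cost
                heapq.heappush(heap, (new_cost, v))
    return dist


def original_costs(source):
    """Run Dijkstra on the adjusted graph, then undo the offset per target."""
    adjusted = dijkstra(w_adj, source)
    return {v: cost - (d[source] - d[v]) for v, cost in adjusted.items()}


print(original_costs(1))  # {1: 0, 2: 10, 3: 40}
```

The cost to reach vertex 2 comes back as 10, which is the cost of path 1 -> 3 -> 2 in the original graph.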

Is the Shortest Path Still the Same?

We are adjusting edge costs to remove negative edges. That way, we are changing the graph to some extent. However, while doing so we must preserve certain properties of it. What was the cheapest path in the original graph must still remain the cheapest path in the transformed graph. Let’s first verify whether that is indeed the case.

We will first look at the original graph (before edge cost adjustment). Let’s take a certain source destination pair (1, 2). There are two paths to reach from vertex 1 to vertex 2.

The first one (original):

d1[1, 2]

= from vertex 1 to vertex 2 directly using edge 1->2

= 20.

The second one (original):

d2[1, 2]

= from vertex 1 to 3 and then from 3 to 2

= 40 + (-30)

= 10.

Now let’s see how the costs of the same two paths change in the new cost adjusted graph.

The first one (cost adjusted):

d’1[1, 2]

= from vertex 1 to vertex 2 directly using edge 1->2

= 50.

The second one (cost adjusted):

d’2[1, 2]

= from vertex 1 to 3 and then from 3 to 2

= 40 + 0

= 40.

We see both the path costs have increased by 30, a constant. So what was earlier the shortest from vertex 1 to vertex 2, in the original graph, which was the second path, using two edges: edge 1->3 and edge 3->2, still remains the shortest path in the cost adjusted graph.

So how did that happen? Let’s have a closer look as to how the path cost changes.

The first one (cost adjusted):

d’1[1, 2]

= w’[1, 2]

= w[1, 2] + d[s, 1] – d[s, 2]

= d1[1, 2] + d[s, 1] – d[s, 2]

The second one (cost adjusted):

d’2[1, 2]

= w’[1, 3] + w’[3, 2]

= w[1, 3] + d[s, 1] – d[s, 3] + w[3, 2] + d[s, 3] – d[s, 2]

= w[1, 3] + d[s, 1] + w[3, 2] – d[s, 2]

= w[1, 3] + w[3, 2] + d[s, 1] – d[s, 2]

= d2[1, 2] + d[s, 1] – d[s, 2]

So we see both the paths, with a certain source u and a certain destination v, have increased with a constant cost = d[s, u] – d[s, v], where s is the extra node that we added before applying Bellman-Ford algorithm.

We can easily see that, no matter how many paths are present between a certain source u and a certain destination v, and no matter how many edges each of those paths uses, each of them is adjusted by adding a constant cost = d[s, u] – d[s, v] to it. And hence, the shortest path in the original graph remains the shortest path in the new cost adjusted, non-negative edge graph.

Let’s consider a path that goes through 5 vertices: u, x1, x2, x3, and v.

In the cost adjusted graph the cost

d’[u, v]

= w’[u, x1] + w’[x1, x2] + w’[x2, x3] + w’[x3, v]

= w[u, x1] + d[s, u] – d[s, x1] + w[x1, x2] + d[s, x1] – d[s, x2] + w[x2, x3] + d[s, x2] – d[s, x3] + w[x3, v] + d[s, x3] – d[s, v]

= w[u, x1] + d[s, u] + w[x1, x2] + w[x2, x3] + w[x3, v] – d[s, v]

= w[u, x1] + w[x1, x2] + w[x2, x3] + w[x3, v] + d[s, u] – d[s, v]

= d[u, v] + d[s, u] – d[s, v]

By generalizing the above, we see that a constant cost d[s, u] – d[s, v] is getting added to all paths from u to v.
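The cancellation of the intermediate terms can also be checked numerically. The sketch below uses made-up weights and made-up d[s, .] values for the five-vertex path; they are purely hypothetical, since the cancellation is plain algebra and holds for any values.

```python
# A path through 5 vertices with hypothetical edge weights.
path = ["u", "x1", "x2", "x3", "v"]
w = {("u", "x1"): 4, ("x1", "x2"): -7, ("x2", "x3"): 3, ("x3", "v"): -2}

# Hypothetical offsets standing in for d[s, .].
d = {"u": 0, "x1": -1, "x2": -8, "x3": -5, "v": -7}


def path_cost(path, weight):
    """Sum the weights of the consecutive edges along the path."""
    return sum(weight[(a, b)] for a, b in zip(path, path[1:]))


# w'[a, b] = w[a, b] + d[s, a] - d[s, b]
w_adj = {(a, b): cost + d[a] - d[b] for (a, b), cost in w.items()}

original = path_cost(path, w)      # 4 - 7 + 3 - 2 = -2
adjusted = path_cost(path, w_adj)  # 5 + 0 + 0 + 0 = 5

# Only the offsets of the two end points survive.
print(adjusted - original, d["u"] - d["v"])  # 7 7
```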

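Are All Negative Edges Removed?

The second thing that we need to prove is that there no longer exists a negative edge in the adjusted graph. After applying Bellman-Ford, we have the shortest path costs from source s. Let’s assume d[s, u] and d[s, v] are the shortest path costs from s to any two vertices, u and v, respectively, where an edge goes from u to v. Since d[s, v] is the cheapest cost to reach v, it cannot be more than the cost of reaching u and then taking the edge from u to v. In that case, we can say,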

d[s, v] <= d[s, u] + w[u, v]

=> 0 <= d[s, u] + w[u, v] – d[s, v]

=> 0 <= w[u, v] + d[s, u] – d[s, v]

=> 0 <= w’[u, v]

This proves that the new edge cost, w’[u, v], is always non-negative.

Why Would We Use Johnson’s algorithm?

So here with Johnson’s algorithm, first we use Bellman-Ford to get a set of values; using which we transform the graph with negative edge to a graph with all non-negative edges so that we can apply Dijkstra’s algorithm.

But why would anyone want to do that? After all, both Bellman-Ford and Dijkstra are SSSP algorithms. What is the point of using one SSSP algorithm to transform a graph so that another SSSP algorithm can be used on the transformed graph?

Dijkstra’s Algorithm is Faster

Well, the reason is that the latter SSSP algorithm, namely Dijkstra’s, is much faster than Bellman-Ford. So, if we need to find shortest paths many times, it is better to first apply the somewhat more expensive SSSP algorithm, Bellman-Ford, to get the graph ready for Dijkstra’s algorithm. Then we execute the much cheaper Dijkstra’s algorithm on this transformed graph, as many times as we want, later.

Sparse Graph

But in such a situation, is it not better to run an All-Pairs Shortest Paths (APSP) algorithm like Floyd-Warshall? After all, Floyd-Warshall can compute APSP at a cost of O(|V|^3) while Bellman-Ford costs O(|V| * |E|) that can shoot up to O(|V|^3), when |E| = |V|^2 for a dense graph.

Yes, that is correct. For a dense graph Johnson’s algorithm won’t possibly be useful. Johnson’s algorithm is preferable for a sparse graph when Bellman-Ford is reasonably efficient to work with it.

Currency Arbitrage with Increasing Rate

13th JLTi Code Jam – Mar 2018

After adding new node to Floyd-Warshall algorithm incrementally and dealing with decreasing rate (of an existing currency pair), the next logical thing is how to deal with increasing rate (of an existing currency pair). By an existing currency pair we mean both the currencies were already present and there was already a rate between the two.

Just like before, given that we have an existing best path cost matrix, what shall we do when a rate between two currencies increases? Once again, we have two options: i) re-compute the cost matrix from scratch, using Floyd-Warshall, at a cost of O(|V|^3) and ii) update the already computed cost matrix using some partial computations. This problem expects a solution using the second option.

Input:

1 USD = 1.380 SGD

1 SGD = 3.080 MYR

1 MYR = 15.120 INR

1 INR = 0.012 GBP

1 GBP = 1.29 USD

1 CAD = 0.57 GBP

1 GBP = 1.30 USD

Explanation: We have 7 inputs here. Each time an input is given, we need to present an output and hence, we have 7 lines of output.

The first 6 inputs do not result in any arbitrage; we output “No luck here”.

At 7th input, we see the existing rate from GBP to USD, which was 1.29 last time, has changed (increased) to 1.30 now. With this new rate in effect, an arbitrage comes into picture now. We need to output the path that creates that arbitrage.

Since this problem deals only with increasing rates, the rate between two currencies will only ever increase from one input to the next. For example, an input like 1 GBP = 1.25 USD will never appear.

When multiple arbitrages exist, printing any one will do.
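To see why only the 7th input produces an arbitrage, we can multiply the rates around the cycle USD -> SGD -> MYR -> INR -> GBP -> USD. The Python sketch below only verifies the numbers in the example; it is not a solution to the task, and the variable and function names are made up for this illustration.

```python
import math

# Rates along USD -> SGD -> MYR -> INR -> GBP -> USD, taken from the input.
rates_before = [1.380, 3.080, 15.120, 0.012, 1.29]  # GBP -> USD at 1.29
rates_after = [1.380, 3.080, 15.120, 0.012, 1.30]   # GBP -> USD at 1.30


def round_trip(rates):
    """Amount of USD we end up with after starting the cycle with 1 USD."""
    return math.prod(rates)


# An arbitrage exists when a round trip returns more than we started with.
print(round_trip(rates_before) > 1)  # False
print(round_trip(rates_after) > 1)   # True
```

With 1.29 the round trip returns a little less than 1 USD, and with 1.30 a little more, which is why the last line of output is the cycle itself.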

Output:

No luck here

No luck here

No luck here

No luck here

No luck here

No luck here

USD -> SGD -> MYR -> INR -> GBP -> USD

Task: For each line of input, for each new vertex, incrementally adjust/add shortest paths at a cost (time) of O(|V|^2), detect the presence of an arbitrage and output as specified. Use existing solution for this.

If input contains a rate that has increased since last time, accommodate that change in the best path cost matrix using some partial computations, instead of computing the whole matrix from scratch.

Collation in MS SQL Server

53rd Friday Fun Session – 9th Mar 2018

What Does Collation Do in SQL Server?

Collation in SQL Server does two things:

- Storage: specifies the character set/code page used to store non-Unicode data

- Compare and sort: determines how to compare and sort all textual data

No Bearing on Code Page of Unicode Data

Code page as specified in a collation is applicable only for non-Unicode characters. Unicode data is stored using UCS-2/UTF-16 character set (UCS-2 is a predecessor of UTF-16), code page 0, irrespective of what collation is in use. So collation has no bearing on the storage of nvarchar, nchar etc. type (Unicode) data.

Many Code Pages

Apart from code page 0 that is used for Unicode data, there are 16 other code pages for storing non-Unicode data.

SELECT name, COLLATIONPROPERTY(name, 'CodePage') AS [Code Page], description FROM ::fn_helpcollations()

Each of the around 3885 collations, as I can see in SQL Server 2012, uses one of these 17 code pages. As said, even when a collation uses one of those 16 non-Unicode code pages, for Unicode data (nvarchar etc.), code page 0 will always be used. Code page for Unicode data is not configurable. However, around 510 collations use code page 0. For them, even for non-Unicode data like varchar, code page 0 will be used.

Two Parts of a Collation Name

A collation name looks like SQL_Latin1_General_CP1_CI_AS. The first part indicates the (language and) code page. The latter part, CI, AS etc., indicates compare/sort rules.

No Bearing on Compare/Sort for Non-textual Data

Collation affects only textual data as far as comparing/sorting is concerned. Non-textual data like integer, date, bool, decimal etc. are not affected.

Options Associated with Collation

All the options as listed below dictate sorting preferences.

- Case-sensitive (_CS) – ABC equals abc or not.

- Accent-sensitive (_AS) – ‘a’ equals ‘ấ’ or not.

- Kana-sensitive (_KS) – Japanese kana characters (Hiragana and Katakana) sensitivity

- Width-sensitive (_WS) – full-width and half-width characters sensitivity

- Variation-selector-sensitive (_VSS) – related to variation selector of Japanese collations.

Collation Sets

There are many collations that can be used in SQL Server. They are broadly divided into three categories:

- SQL collations

- Windows collations

- Binary collations

SQL Collations

SQL collations use different algorithms for comparing Unicode and non-Unicode data. Let us understand this using an example.

Suppose the SqlDb database, as used for the below example, is using SQL_Latin1_General_CP1_CI_AS (CP1 stands for code page 1252). The NameU column uses nvarchar (Unicode) while the NameNU column uses varchar. Sorting on them produces two different sets of results as shown below.

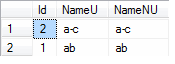

SELECT [Id], [NameU], [NameNU] FROM [SqlDb].[dbo].[Test1] ORDER BY [NameU]

ab comes before a-c when sorting is done based on the Unicode column.

SELECT [Id], [NameU], [NameNU] FROM [SqlDb].[dbo].[Test1] ORDER BY [NameNU]

On the other hand a-c comes before ab when sorting is done based on the non-Unicode column.

Windows Collations

Windows collation, introduced in SQL Server 2000, uses the same algorithm for comparing both Unicode and non-Unicode data.

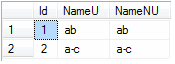

SqlDbU database as used below is using Windows collation Latin1_General_CI_AS. Using the same table definition and same queries as earlier, we see that both result sets are the same unlike earlier.

SELECT [Id], [NameU], [NameNU] FROM [SqlDbU].[dbo].[Test1] ORDER BY [NameU]

ab comes before a-c when sorting is done based on the Unicode column. So we see sorting results on Unicode data remain the same in both SQL and Windows collation.

SELECT [Id], [NameU], [NameNU] FROM [SqlDbU].[dbo].[Test1] ORDER BY [NameNU]

Once again, ab comes before a-c when sorting is done based on the non-Unicode column.

Consistent Sorting Behavior across Database and Application

One more good thing about Windows collation is that, if it is used, sorting behavior is consistent with other applications running on a computer using the same locale settings.

After all, “Windows collations are collations defined for SQL Server to support the Windows system locales available for the operating system on which SQL Server instances are installed.”

For a new SQL Server installation, a Windows collation is recommended.

Difference at a Glance

Difference between a SQL collation and its equivalent Windows collation can also be seen from the description column of the below query result.

SELECT

name,

COLLATIONPROPERTY(name, 'CodePage') AS [Code Page],

description

FROM ::fn_helpcollations()

WHERE name IN ('Latin1_General_CI_AS', 'SQL_Latin1_General_CP1_CI_AS')


As we see, (inside SQL Server) the only difference is how the sort/compare happens for non-Unicode data.

Comparing/Sorting Unicode

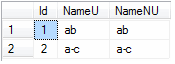

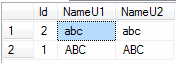

Comparing/sorting results for Unicode data remain the same in equivalent (language and option being the same) SQL and Windows collation. But they will vary when options are different. In the below example, Both NameU1 and NameU2 columns are using nvarchar (Unicode) data type. But they are using two different collations having different options – the first is using a case-sensitive collation while the latter is using a case-insensitive one. Output will be based on collation option and hence they will differ.

SELECT [Id],

[NameU1], -- uses SQL_Latin1_General_CP1_CS_AS

[NameU2] -- uses SQL_Latin1_General_CP1_CI_AS

FROM [AbcSU].[dbo].[Test1]

ORDER BY [NameU2]

If we ORDER BY column NameU1 that is using a case-sensitive collation, we see the below result.

If we ORDER BY column NameU2 that is using a case-insensitive collation, we see the below result (following the same order as the data inserted into the table).

How to Set Collations

Collations can be set at server, database, and column level. Apart from that, it can be used in an expression to resolve two different collations.

Server Collation

There is a server level collation. Once set during installation, changing it would require dropping all user databases first (after generating database creation scripts, exporting data etc.), rebuilding the master database etc., then recreating the user databases and importing the data back.

Database Collation

By default, when a user database is created, it inherits server’s collation. However, it can specify its own collation as well. That way, each database can have its own collation. Database collation is the default for all string columns, temporary objects, variable names and other strings in the database. We cannot change the collation for system databases.

Once a database is created, collation for it can be further changed. However, we need to take care as to how the possible code page change would affect the existing data. Also, how the option changes, if any, would produce different query/join result.

Column Level Collation

Down the line, collation can be specified at column level (of a table). Same concerns, as to how the existing data would behave, have to be addressed.

Expression Level Collation

Collation can be specified at expression level as well – for example, to join two columns belonging to two different collations, which SQL Server would otherwise complain about.

Changing Collation Changes Meaning of Underlying Data

If collation is changed for a column/database, underlying code page might also change. If it differs, the new collation might render an existing char as something different in the new collation. For example, a character represented by code 100 remains the same at storage – still 100, with changing collation, but the mapped char in the new collation might be different.
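The effect can be illustrated outside SQL Server with Python's built-in codecs. This is only a stand-in for what the database does, with assumptions of its own: byte 0xE9 is chosen for illustration (values below 128 are the same in both code pages used here), and code pages 1252 and 1251 are just two examples of a Latin and a Cyrillic code page.

```python
# One stored byte; its value never changes.
stored = bytes([0xE9])

# The same byte read through two different code pages.
as_latin = stored.decode("cp1252")     # Western European code page
as_cyrillic = stored.decode("cp1251")  # Cyrillic code page

print(as_latin)     # é
print(as_cyrillic)  # й
```

The storage is identical in both cases; only the character it maps to differs.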

For Unicode data, output/mapping remains the same. After all, there is just one code page for them.

As far as compare/sort is concerned, some of the things might change. For example, the result of a query that sorts on a textual column may change if one of the collation options, say case-sensitivity, changes. The same might affect the cardinality of a query result that compares textual values, such as a join. A query that was earlier producing a certain number of rows can produce more or fewer rows now.

Safe Collation Change

However, as far as changing a SQL collation to a Windows collation (or vice versa) is concerned, as long as both the collation options remain the same and the database is using only Unicode data (nvarchar etc.), it is quite safe. The below query can be used to find what data types are used in the database table (and view) columns.

SELECT * -- distinct(DATA_TYPE)

FROM INFORMATION_SCHEMA.COLUMNS

WHERE DATA_TYPE = 'varchar'

Temp Table Issues

One particularly common problem that arises from the difference in collation is to deal with temp tables. When collation of a database varies from its server’s collation, the temporary tables it creates use a different collation (server’s collation) from it. After all, temp tables are created in tempdb database and this system database follows the server’s collation. Temp table with a different collation than the database that created it works fine on its own. However, if say a join (on textual column) is required between that temp table and a table in the user database, and that is often the case, then SQL Server would complain as the collations of the two columns are different.

To avoid this issue, when a temp table is defined, it is safe to specify the right collation for its textual columns: the same collation as that of the database that creates it and later joins with it.

Address nvarchar(10) COLLATE Latin1_General_CI_AS NULL;

Alternatively, while joining two columns belonging to different collation, we can specify what collation should be used (collation in expression).

Suppose #T1 is using Windows collation Latin1_General_CI_AS while T2 is using SQL collation SQL_Latin1_General_CP1_CI_AS. If we want the join to take place using the SQL collation then we will use the below query.

SELECT * FROM #T1 INNER JOIN T2 ON #T1.field COLLATE SQL_Latin1_General_CP1_CI_AS = T2.field

Dijkstra’s Problem with Negative Edge

46th Friday Fun Session – 12th Jan 2018

Dijkstra’s algorithm cannot work with negative edge. Also, we cannot trivially add a constant to each of the edge weights and make them non-negative to proceed further.

Why Does Dijkstra’s Algorithm not Work with Negative Edge?

In the above figure, we are trying to get shortest paths from source node 1 to all other nodes (node 2 and node 3). Since Dijkstra’s algorithm works by employing a greedy process, it outputs 20 as the shortest path cost to node 2.

As we can see, from node 1, we can go to two nodes – node 2 and node 3, at a cost of 20 and 40 respectively. Hence, going to node 2 is cheaper. And that is why, it outputs 20 to be the cheapest cost to reach node 2.

However, we know that the cheapest cost to reach node 2 is through node 3. And the associated cost is: 40 + (-30) = 10. So Dijkstra’s algorithm gets it wrong. It gets it wrong because it cannot foresee that later, a negative edge can bring down the total cost to below 20.

If we carefully observe, we see that the wrong calculation by Dijkstra’s algorithm happens due to the negative edge. Had cost from node 3 to node 2 not been negative, it could never bring down the total cost to lower than 20, after getting added to 40.
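The wrong answer can be reproduced with a small Python sketch. It assumes the textbook form of Dijkstra’s algorithm, where a node’s cost is treated as final once the node is taken out of the priority queue; the function name is made up for this illustration.

```python
import heapq

# The three edges of the example graph: (from, to) -> cost.
edges = {(1, 2): 20, (1, 3): 40, (3, 2): -30}


def dijkstra_textbook(edges, source):
    """Greedy Dijkstra: once a node is finalized, its cost is never revised."""
    adj = {}
    for (u, v), cost in edges.items():
        adj.setdefault(u, []).append((v, cost))
    dist = {source: 0}
    finalized = set()
    heap = [(0, source)]
    while heap:
        cost_u, u = heapq.heappop(heap)
        if u in finalized:
            continue
        finalized.add(u)
        for v, cost in adj.get(u, []):
            if v in finalized:
                continue  # greedy assumption: a finalized cost cannot improve
            new_cost = cost_u + cost
            if v not in dist or new_cost < dist[v]:
                dist[v] = new_cost
                heapq.heappush(heap, (new_cost, v))
    return dist


print(dijkstra_textbook(edges, 1)[2])  # 20, although the cheapest cost is 10
```

Node 2 is finalized at cost 20 before node 3 is even looked at, so the edge with cost -30 arrives too late to matter.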

Why Does Adding a Constant Cost to Each Edge not Work?

Now that we realize, Dijkstra’s algorithm fails due to the negative edge from node 3 to node 2, having the value -30, we might be tempted to add 30 to each of the edges. We might think, this way we can remove the negative edge. And doing so would be fair; after all, we are adding the same value to each of the edges. Let’s do it and see what happens.

After updating the edge costs, the graph looks as shown above. So what is the cheapest path from node 1 to node 2 now?

Well, now the cheapest cost is 50, which uses the direct edge from node 1 to node 2. But this is not supposed to be the cheapest path, right? The cheapest path was node 1 -> node 3 -> node 2, before we adjusted the edge cost. Adjusting edge cost should not change the graph. It must not change the cheapest path, right?

So why does that happen? Well, if we observe, we find that path node 1 -> node 3 -> node 2 uses two edges/segments – node 1 to node 3 and node 3 to node 2. On the other hand, path node 1 -> node 2 uses just one edge/segment. The way we have updated the edge cost – adding a constant to each path segment – is not fair to a path using more path segments. For the path that uses two path segments, which was originally the cheapest path, we have added the constant 30 twice. On the other hand, for the path that uses just one path segment, we have added 30 only once. That way, we are unfair to the path using more path segments.
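The unfairness is easy to verify with a few lines of Python. This is a sketch using the example’s three edges; the helper name path_cost is an assumption made for illustration.

```python
# Original edges and the same edges after adding 30 to each of them.
edges = {(1, 2): 20, (1, 3): 40, (3, 2): -30}
shifted = {edge: cost + 30 for edge, cost in edges.items()}


def path_cost(path, weight):
    """Sum the weights of the consecutive edges along the path."""
    return sum(weight[(a, b)] for a, b in zip(path, path[1:]))


direct = [1, 2]    # one path segment
via_3 = [1, 3, 2]  # two path segments

print(path_cost(direct, edges), path_cost(via_3, edges))      # 20 10
print(path_cost(direct, shifted), path_cost(via_3, shifted))  # 50 70
```

The one-segment path grows by 30 while the two-segment path grows by 60, so the order of the two paths flips.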

We must add a constant to each of the paths, not to each of the path segments.

Solution

Johnson’s algorithm does this – it adds a constant cost to each path from a certain source s to a certain target t. It does so by finding a special set of offset values to remove the negative edges from a graph. Once that is done, Dijkstra’s algorithm can work. But that works only in the absence of a negative cycle in the graph.